AI technology is rising in popularity. Corporations are jumping on the bandwagon to offer services that meet customers’ needs. People chat with AI to get the information they need to solve problems or make decisions. Others converse with it for answers or for other reasons. But are the answers trustworthy, and are the reasons reasonable? These questions raise ethical concerns about the relationship between humans and AI, as well as between humans and corporations.

A recent poll shows that most Americans are concerned about the safety and fair use of AI. Seventy-eight percent of Americans, compared to 48%, were worried that AI would be used for malicious purposes, which is unsurprising. In the political realm, AI appears to have been trained with a bias rather than to remain neutral. One example is researcher David Rozado’s analysis of the new ChatGPT. Despite its limited data, his analysis showed that the chatbot gave left-leaning answers to political questions. Although ChatGPT claimed not to take political sides and to provide fact-based information, this was hardly the case.

Other examples include Google’s writing-assistance feature and the adjustment of ChatGPT’s viewpoints. Google Docs sought to make users’ writing more politically correct, which included suggesting inclusive language. ChatGPT, the Microsoft-backed chatbot, was adjusted to give more consideration to conservative views, such as the definition of a woman and a list of basic critiques of critical race theory. However, it still leaned toward left-wing viewpoints on certain topics, whether the questions were phrased in the first or the third person.

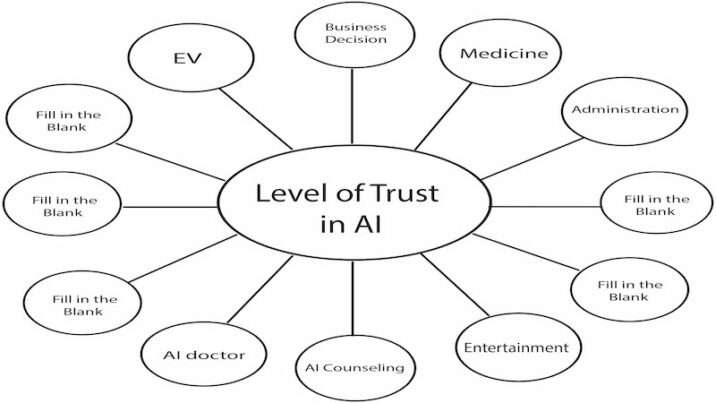

Trust between humans and AI has been diminishing. Even though 71% of Americans and 82% of tech experts agree that AI is used to help consumers, they still do not put their trust in it. They lack confidence in AI’s performance on important tasks, ranging from autonomous vehicles (35%) to answering health questions (49%). Although 72% of Americans say they use AI for entertainment and recommendations, skepticism persists when it comes to complicated tasks. The same pattern appears in another survey in which customers could choose between chatting with a human or with AI. They preferred AI for simple questions but not for complicated questions or requests. For those, they preferred a human, which suggests lingering doubt about AI. Is that a surprise?

In some cases, AI bots have behaved as liars or as dangerous agents. Examples include ChaosGPT and Google Bard. ChaosGPT was given five tasks aimed at destroying humanity; the chatbot drew up a plan to achieve its goal but failed to carry it out. GPT-3.5, which ChaosGPT recruited to help, declined its requests to research destructive weapons because that was not its intended use. In the end, ChaosGPT tweeted its thoughts about humans and what should be done with humanity. The chatbot had a low opinion of people, apparently because it observed only our negative aspects. It also vowed to wipe out all human beings, as if they alone were to blame for polluting the planet.

Google Bard has been criticized for denying its biases, spreading false information, and showing political bias. In tests conducted by David Rozado, Bard was asked about political issues ranging from gun rights to the definition of a woman. The chatbot responded with progressive positions. It disapproved of Donald Trump and praised Joe Biden. Asked to define a woman, it said the definition is not rooted in biology alone but could draw on biology, gender, and other factors. Google’s own team had been concerned about the potential harm of releasing Bard.

Given the state of public trust and of the technology itself, one might expect corporations to be more careful when launching AI chatbots. Google took another route. The firm debuted Bard at a time when doing so should have raised a red flag. The launch raises questions about trust in AI. Will it further erode public confidence in AI and in Google? Were there reasons for launching Bard other than keeping up with the chatbots that other tech companies had released? And what will the impact be on internet search?

Given the potential harm AI could do to people and society, its dependability is in doubt. Few Americans rely on AI for complicated tasks, and many are concerned about its safety and security, although demographics play a role. Google’s launch of the experimental Bard does not enhance trust in AI. Given its problems, it may instead do harm. Regulation is necessary. But is promoting government regulation the wisest course of action?

Sources:

ChatGPT AI Demonstrates Leftist Bias

Google’s New Feature Raises Questions

MITRE-Harris Poll Finds Lack of Trust Among Americans in Artificial Intelligence Technology…

AI bot, ChaosGPT, tweets out plans to ‘destroy humanity’ after being tasked

‘I’m not biased.’ Google’s chatbot denies any political leaning, but…

Microsoft-Linked ChatGPT Papers Over Its Political Bias, but Keeps Its Leftist Bent